Master Web Scraping: Unlock the Power of Beautiful Soup with Python

Web scraping is a valuable skill for Python developers: it's like having a tireless assistant who can fetch and organize data for you, minus the coffee breaks! Beautiful Soup, one of Python's most-loved libraries, makes parsing HTML and XML remarkably straightforward, and the requests library handles downloading the pages. In this guide, you'll build web scrapers step by step and learn how to automate data extraction.

What You'll Master

- Setting up your Python environment

- Fetching web pages reliably using requests

- Efficient HTML parsing with Beautiful Soup

- Extracting actionable data from parsed HTML

- Troubleshooting common scraping challenges

- Advanced scraping strategies

Step 1: Set Up Your Python Environment

Start by ensuring Python is installed. Next, quickly install Beautiful Soup and Requests:

pip install beautifulsoup4 requests

Troubleshooting Tip:

- Installation Troubles: If the installation fails, make sure you're using Python 3.x and update your pip (pip install --upgrade pip). A clean and updated environment solves most installation headaches.
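To confirm the installation worked, a quick check like the sketch below imports both packages and prints their versions. It assumes both packages expose a __version__ attribute, which current releases of beautifulsoup4 and requests do.

```python
# Quick sanity check: an ImportError here means the install did not succeed.
import bs4
import requests

print("Beautiful Soup:", bs4.__version__)
print("Requests:", requests.__version__)
```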

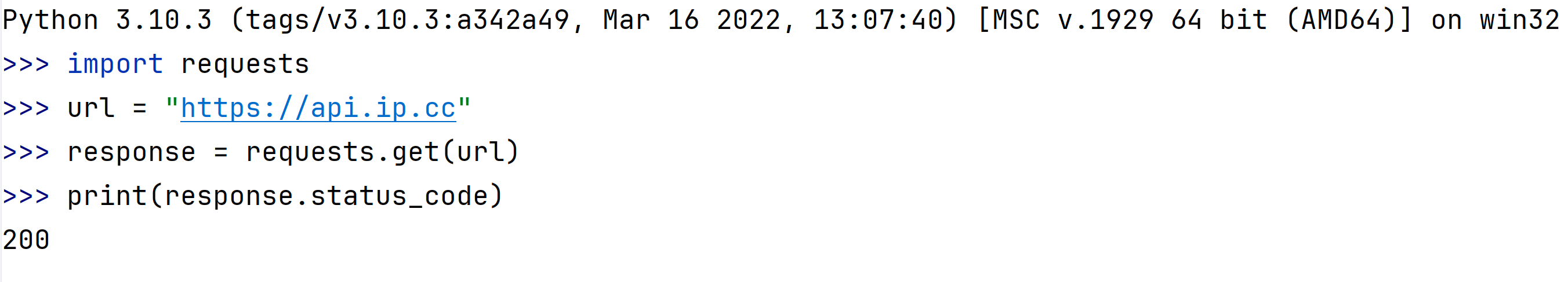

Step 2: Reliably Fetching Web Pages

Fetch web pages easily with the powerful requests library:

import requests

url = "https://example.com"

response = requests.get(url)

print(response.status_code)

A status of 200 means you've successfully fetched the page!
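Checking the status code by hand gets tedious, and a request can also fail before any status comes back (a timeout, a DNS error, a malformed URL). Here is a minimal sketch of a more defensive fetch. The name fetch_html is a hypothetical helper, and the 10-second timeout is an arbitrary choice rather than a recommended value.

```python
import requests


def fetch_html(url, timeout=10):
    """Return the page body as text, or None if the request fails."""
    try:
        response = requests.get(url, timeout=timeout)
        # Raise requests.HTTPError for 4xx and 5xx status codes.
        response.raise_for_status()
    except requests.RequestException as exc:
        # Covers connection errors, timeouts, invalid URLs, and HTTP errors.
        print(f"Request failed: {exc}")
        return None
    return response.text


# A URL with no scheme is rejected before any network traffic is sent,
# so this call returns None.
print(fetch_html("not-a-url"))
```

Calling fetch_html("https://example.com") instead should return the page's HTML when the site is reachable. Returning None on failure keeps the calling code simple, at the cost of hiding which error occurred; re-raising the exception is a reasonable alternative.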

Troubleshooting Tips:

- Incorrect URLs: Double-check the URL spelling and structure.

- Blocked Requests: Websites often block obvious scraper bots. Add custom headers or use proxies to appear more human.

Pro Tip: Routing requests through proxies spreads them across several IP addresses, which makes your scraper harder to detect and block.

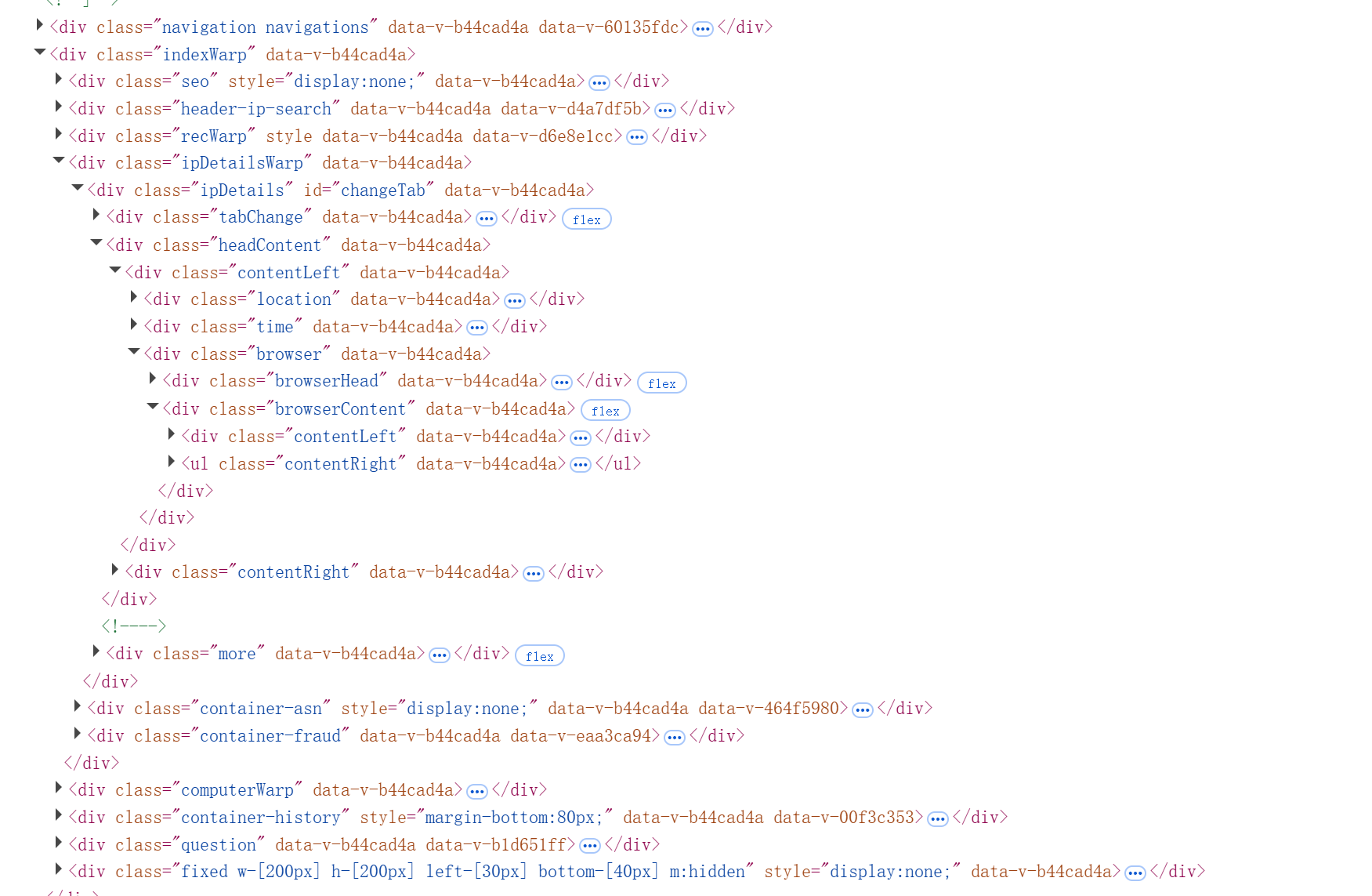

Step 3: HTML Parsing with Precision

Transform messy HTML into structured data with Beautiful Soup:

from bs4 import BeautifulSoup

soup = BeautifulSoup(response.text, 'html.parser')

print(soup.prettify())

Beautiful Soup turns chaos into structured beauty!

Troubleshooting Tip:

- Parser Choices: If the parsed content doesn't look right, experiment with other parsers like 'lxml' or 'html5lib'. Both are separate packages, so install them first (pip install lxml html5lib).
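Because the alternative parsers are optional installs, it can help to fall back gracefully when one is missing. The sketch below tries each parser in turn; parse_with_fallback is a hypothetical helper name, and the order of preference is only an assumption.

```python
from bs4 import BeautifulSoup, FeatureNotFound


def parse_with_fallback(html, parsers=("lxml", "html5lib", "html.parser")):
    """Parse html with the first parser in the list that is installed."""
    for name in parsers:
        try:
            return name, BeautifulSoup(html, name)
        except FeatureNotFound:
            # This parser is not installed; try the next one.
            continue
    raise RuntimeError("None of the requested parsers is available")


# Deliberately invalid markup: each parser repairs it in its own way.
parser_name, soup = parse_with_fallback("<a></p>")
print(parser_name, "->", soup)
```

Running this with different parsers installed is a quick way to see how each one repairs broken markup, which is usually the reason parsed content "doesn't look right".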

Step 4: Extracting Data like a Pro

Example 1: Basic Data Extraction

Quickly grab all links (<a> tags):

links = soup.find_all('a')
for link in links:
    href = link.get('href')  # None if the tag has no href attribute
    text = link.text.strip()
    print(f"Link Text: {text}, URL: {href}")

Easy and effective!
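Real pages often mix absolute links, relative links, and anchor tags with no href at all. As a sketch of one way to handle that, the example below skips anchors without an href and resolves relative paths against a base URL. The function name extract_links and the sample markup are hypothetical.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup


def extract_links(html, base_url):
    """Return (text, absolute_url) pairs for every <a> tag that has an href."""
    soup = BeautifulSoup(html, "html.parser")
    links = []
    # href=True matches only tags that actually carry an href attribute.
    for anchor in soup.find_all("a", href=True):
        text = anchor.get_text(strip=True)
        # urljoin leaves absolute URLs alone and resolves relative ones.
        links.append((text, urljoin(base_url, anchor["href"])))
    return links


sample = (
    '<a href="/about">About</a>'
    "<a>No href</a>"
    '<a href="https://example.org/x"> External </a>'
)
print(extract_links(sample, "https://example.com"))
# [('About', 'https://example.com/about'), ('External', 'https://example.org/x')]
```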

Example 2: Advanced Data Extraction

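Now for a more sophisticated example: scraping headlines and summaries from a page that wraps each story in a div with the class article:

response = requests.get("https://news.example.com")
soup = BeautifulSoup(response.text, 'html.parser')
articles = soup.find_all('div', class_='article')
for article in articles:
    # Assumes every article contains an <h2> and a <p class="summary">;
    # find() returns None when nothing matches, and .text would then fail.
    headline = article.find('h2').text.strip()
    summary = article.find('p', class_='summary').text.strip()
    print(f"Headline: {headline}\nSummary: {summary}\n")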

Structured, insightful results every time!

Troubleshooting Tips:

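- Missing Attributes: Verify that an element has an attribute before reading it. tag.get('href') returns None when the attribute is absent, while tag['href'] raises a KeyError.

- Empty Content: find() returns None when no element matches, so check the result before calling .text on it, and handle empty strings as well.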
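The two tips above can be sketched as a small helper that never assumes an element exists. The name text_or_default and the sample markup are hypothetical; the second article deliberately has no summary.

```python
from bs4 import BeautifulSoup

# Hypothetical markup: the second article has no summary paragraph.
SAMPLE_HTML = """
<div class="article">
  <h2>First headline</h2>
  <p class="summary">First summary.</p>
</div>
<div class="article">
  <h2>Second headline</h2>
</div>
"""


def text_or_default(parent, name, default="", **filters):
    """Return the stripped text of the first matching child, or default."""
    tag = parent.find(name, **filters)
    return tag.get_text(strip=True) if tag is not None else default


soup = BeautifulSoup(SAMPLE_HTML, "html.parser")
rows = []
for article in soup.find_all("div", class_="article"):
    rows.append({
        "headline": text_or_default(article, "h2", default="(no headline)"),
        "summary": text_or_default(
            article, "p", default="(no summary)", class_="summary"
        ),
    })

for row in rows:
    print(row)
```

With this pattern, one incomplete article produces a placeholder value instead of an AttributeError that stops the whole run.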

Step 5: Tackling Common Web Scraping Challenges

Scraping isn't always straightforward, but here are proven strategies to overcome common hurdles:

- Blocked Requests: Rotate proxies and user agents frequently.

- Dynamic Content: Use Selenium or Playwright for JavaScript-heavy sites.

- Rate Limiting: Add polite pauses between requests (time.sleep()) to prevent getting banned.

Example for adding realistic headers:

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64)'
}
response = requests.get(url, headers=headers)
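Headers and pauses work well together. The following is a minimal sketch, not a complete anti-blocking solution: it sends the same headers on every request through a requests Session and sleeps between requests. The function name fetch_politely, the one-second default delay, and the 10-second timeout are all assumptions made for illustration.

```python
import time

import requests

# Same header value as in the example above.
DEFAULT_HEADERS = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"}


def fetch_politely(urls, delay_seconds=1.0):
    """Fetch each URL in turn, pausing between requests.

    Returns a dict mapping each URL to its HTML, or to None on failure.
    """
    pages = {}
    # A Session reuses connections and sends the same headers every time.
    with requests.Session() as session:
        session.headers.update(DEFAULT_HEADERS)
        for index, url in enumerate(urls):
            if index > 0:
                # Pause before every request except the first.
                time.sleep(delay_seconds)
            try:
                response = session.get(url, timeout=10)
                response.raise_for_status()
                pages[url] = response.text
            except requests.RequestException:
                # Record the failure and keep going with the remaining URLs.
                pages[url] = None
    return pages
```

A call such as fetch_politely(["https://example.com/a", "https://example.com/b"], delay_seconds=2) would wait two seconds between the two requests; those URLs are placeholders. A fixed delay is the simplest option, and adding a small random jitter is a common refinement.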

Step 6: Advanced Web Scraping Techniques

As you advance, explore:

- CSS Selectors for precise data targeting

- Regular Expressions for sophisticated text matching

- Ethical Scraping Practices to stay compliant and respectful
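As a taste of the first two items, here is a short sketch that uses Beautiful Soup's select() method for CSS selectors and Python's re module for pattern matching. The markup, URLs, and variable names are hypothetical stand-ins for a real news page.

```python
import re

from bs4 import BeautifulSoup

# Hypothetical markup standing in for a real news page.
SAMPLE_HTML = """
<div class="article">
  <h2><a href="/news/101">Budget approved</a></h2>
  <p class="summary">Council votes 7 to 2.</p>
</div>
<div class="article">
  <h2><a href="/news/102">Bridge reopens</a></h2>
  <p class="summary">Repairs took 14 months.</p>
</div>
<a href="/about">About us</a>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# CSS selector: only links that sit directly inside an article's <h2>.
titles = [a.get_text(strip=True) for a in soup.select("div.article > h2 > a")]

# Regular expression as an attribute filter: hrefs shaped like /news/<digits>.
news_links = soup.find_all("a", href=re.compile(r"^/news/\d+$"))
article_ids = [int(a["href"].rsplit("/", 1)[1]) for a in news_links]

# Regular expression on extracted text: pull the numbers out of each summary.
numbers = [re.findall(r"\d+", p.get_text()) for p in soup.select("p.summary")]

print(titles)       # ['Budget approved', 'Bridge reopens']
print(article_ids)  # [101, 102]
print(numbers)      # [['7', '2'], ['14']]
```

The CSS selector replaces a chain of find_all() and find() calls with one expression, while the regular expression filters on the shape of a value rather than on the page structure.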

Conclusion

Thank you for investing your time in this guide! You can now fetch pages, parse their HTML, and extract the data you need, which takes much of the manual work out of data collection and analysis. Always remember to scrape responsibly, respect websites' rules (such as robots.txt and terms of service), and maintain good scraping etiquette to keep data plentiful and your reputation clean.

Continue Your Journey to Scraping Mastery

Keep sharpening your skills by:

- Scraping dynamic content with Selenium

- Mastering CSS selectors and regex

- Embracing ethical scraping principles

Happy scraping—go forth confidently and scrape responsibly!